Data Scientist Tutorial¶

This section takes the first-time user through the DKube workflow using a sample program and dataset. The MNIST model is used to provide a simple, successful initial experience.

General Workflow¶

The workflow demonstrated in this example is as follows:

- Load the program folder as a DKube Project
- Load the dataset folder as a DKube Dataset
- Create a model placeholder for versioned output
- Create and open a DKube JupyterLab Notebook
- Create a Training Run
- Test Model Inference

Create Project¶

Load the MNIST program folder from a GitHub repository into DKube from the Repo menu by selecting “+ Project”.

The fields should be filled in as follows, then select “Add Project”.

| Field | Value |
|---|---|
| Name | mnist |
| Project Source | Git |
| url | |
| Branch | 2.0 |

This will create the mnist Project.

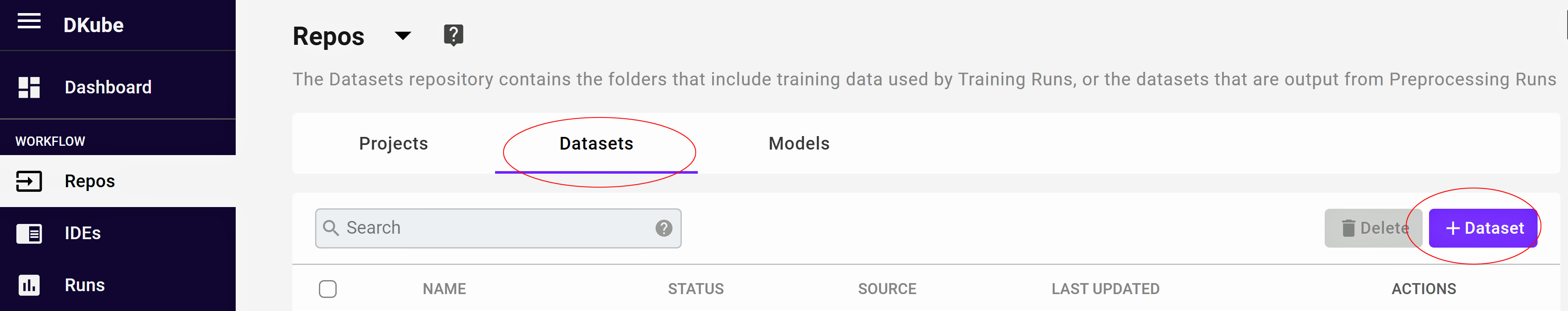

Create Dataset¶

Load the MNIST dataset folder from a GitHub repository into DKube from the Datasets menu by selecting “+ Dataset”.

The fields should be filled in as follows, then select “Add Dataset”.

| Field | Value |
|---|---|
| Name | mnist |
| Dataset Source | Git |
| url | |
| Branch | 2.0 |

This will create the mnist Dataset.

Create Model¶

Create a Model to serve as the versioned output of the Training Run later in the process.

The fields should be filled in as follows, then select “Add Model”.

| Field | Value |
|---|---|
| Name | mnist |
| Versioning | DVS |
| Model Store | default |
| Model Source | None |

Create Notebook¶

Create a JupyterLab Notebook from the IDE menu to experiment with the program by selecting “+ JupyterLab”.

Fill in the fields as shown.

Basic Submission Screen¶

| Field | Value |
|---|---|
| Name | mnist |

All the other fields should be left in their default state. Do not submit at this point. Select the “Repos” tab.

Repos Submission Screen¶

| Field | Value |
|---|---|
| Project | mnist |

| Field | Value |
|---|---|
| Dataset | mnist |
| Version | Select ver 1 |
| Mount Path | /opt/dkube/input |

The mount path is the path that is used within the program code to access the input dataset.

All the other fields should be left in their default state. Select “Submit” to start the Notebook.

Note

The initial Notebook will take a few minutes to start. Follow-on Notebooks with the same framework version will start more quickly.

While on this screen, start the default DKube notebook instance by selecting the “Start” icon.

Open JupyterLab Notebook¶

Open a JupyterLab notebook by selecting the Jupyter icon under “Actions” on the far right.

Create Training Run¶

Create a Training Run from the Runs menu to train the mnist model on the dataset and create a trained model.

Fill in the fields as shown.

Basic Submission Screen¶

| Field | Value |
|---|---|
| Name | mnist |
| Start-up script | python model.py |

All the other fields should be left in their default state. Select the “Repos” tab.

Repos Submission Screen¶

To submit a Training Run:

- A Project and Dataset need to be selected for input
- A Model needs to be selected for output

Input Selections¶

| Field | Value |
|---|---|
| Project | mnist |

| Field | Value |
|---|---|
| Dataset | mnist |
| Version | Select ver 1 |
| Mount Path | /opt/dkube/input |

The mount path is the path that is used within the program code to access the input dataset.
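As a rough illustration of how program code might use the input mount path, the sketch below lists the files found under `/opt/dkube/input`. It is not taken from the tutorial's `model.py`; the function name `list_input_files` and the `MNIST_INPUT_DIR` environment override are hypothetical, added only so the snippet can run outside DKube.

```python
import os
from pathlib import Path
from typing import List

# Mount path entered on the Repos screen. The environment variable is a
# hypothetical convenience for local runs, not a DKube setting.
DATA_DIR = Path(os.environ.get("MNIST_INPUT_DIR", "/opt/dkube/input"))


def list_input_files(data_dir: Path = DATA_DIR) -> List[str]:
    """Return the sorted relative paths of all files under the dataset mount."""
    if not data_dir.is_dir():
        # Outside DKube the mount does not exist, so report no files.
        return []
    return sorted(
        p.relative_to(data_dir).as_posix()
        for p in data_dir.rglob("*")
        if p.is_file()
    )


if __name__ == "__main__":
    files = list_input_files()
    print(f"{len(files)} file(s) found under {DATA_DIR}")
```

Running a check like this in the Notebook is a quick way to confirm that the Mount Path field matches the path the program reads from.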

Output Selection¶

A Model needs to be selected for the Training Run output.

| Field | Value |
|---|---|
| Model | new-model |
| Version | Select ver 1 |
| Mount Path | /opt/dkube/output |

The Mount Path corresponds to the path within the Program code where the output model will be written. After the fields have been completed, select “Submit”.

Note

The initial Run will take a few minutes to start. Follow-on Runs with the same framework version will start more quickly.

The Training Run will appear in the “All Runs” tab.
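The output Mount Path works the same way in the other direction: whatever the program writes under `/opt/dkube/output` becomes the new model version. The sketch below is only an illustration under that assumption. The function `save_model_artifact`, the file name `model.json`, and the JSON format are hypothetical stand-ins; a real training program would normally call its framework's own save routine instead.

```python
import json
from pathlib import Path

# Mount path entered for the output Model on the Repos screen.
OUTPUT_DIR = Path("/opt/dkube/output")


def save_model_artifact(weights: dict, output_dir: Path = OUTPUT_DIR) -> Path:
    """Write a placeholder model file into the output mount and return its path."""
    output_dir.mkdir(parents=True, exist_ok=True)
    # "model.json" is a hypothetical file name used only for this sketch.
    target = output_dir / "model.json"
    target.write_text(json.dumps(weights))
    return target
```

Passing a different `output_dir` makes it possible to try the function locally before submitting the Run.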

Create Test Inference¶

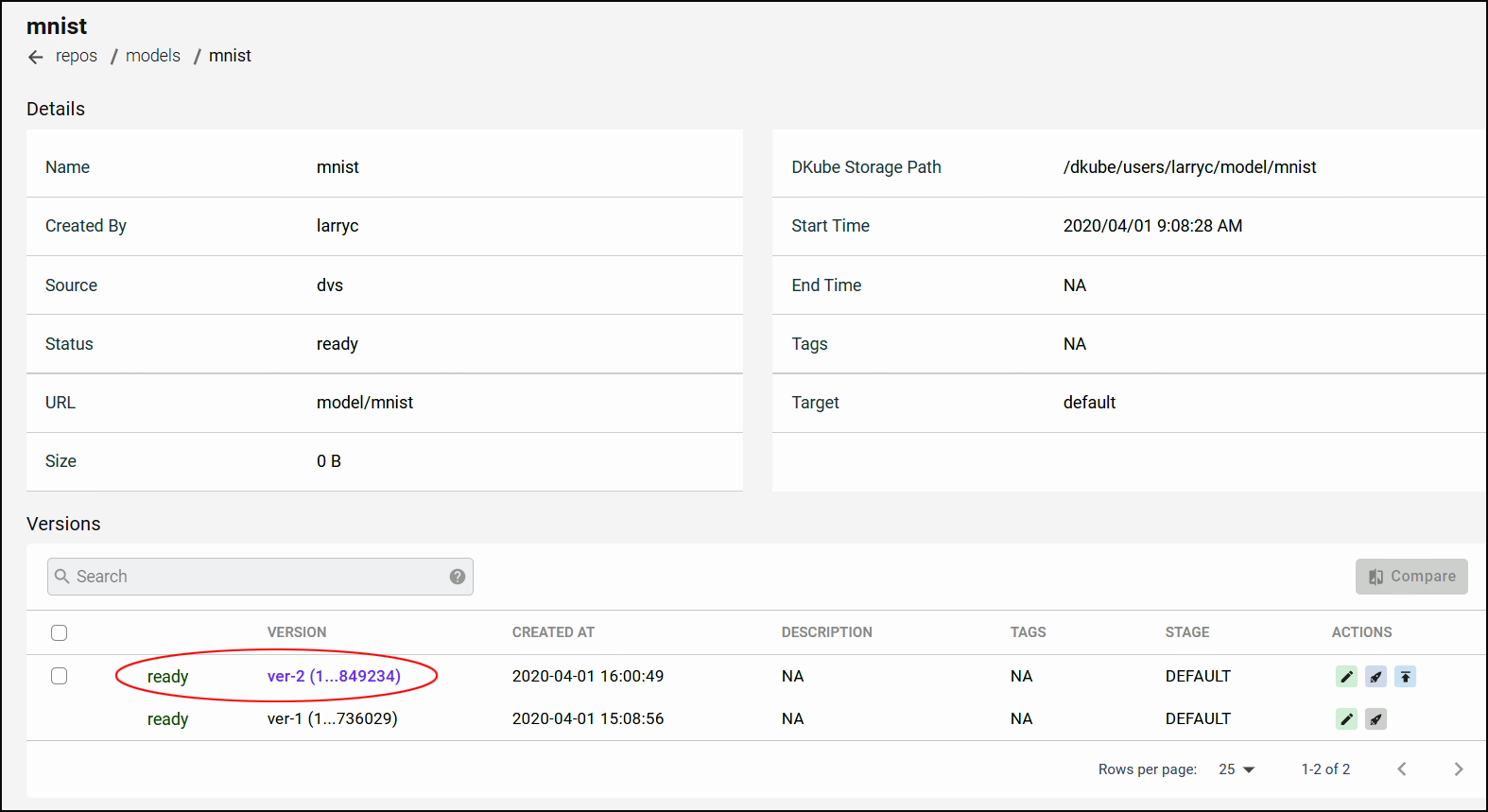

A Run status of “Complete” indicates that a trained Model has been created. The trained Model will appear in the Models Repo.

Selecting the trained Model will provide the details on the model, including the versions.

- Ver 1 of the model is the initial blank version that was created earlier in the tutorial in order to set up the versioning capability.
- Ver 2 is the new model that was created by the training run.

While on this screen, create a test inference for the model using the “Test Inference” button. This opens a pop-up window where you can enter the inference name and select whether to use CPU or GPU inference.

| Field | Value |
|---|---|
| Name | mnist |
| CPU/GPU | CPU |
| Preprocessing | Select |
| Docker Image url | ocdr/mnist-example-preprocess:2.0.4 |

The lineage for the model can be viewed by selecting the “Lineage” tab. This shows all of the inputs that were used to create the model.

The test inference is viewed from the “Test Inferences” menu. Once the status of the test inference shows “Running”, the “Endpoint” column provides the API that is serving the model.

Test the Trained Model¶

A Model can be tested for inference accuracy. This is accomplished from the special DKube Notebook started previously in this tutorial.

After opening JupyterLab for the DKube notebook, navigate to the tools folder and select the dkube.html file. This will show a window with several applications.

Before choosing any application, select the Trust HTML button at the top of the JupyterLab window. The mode toggles, so selecting it will instruct JupyterLab to allow the application to be opened.

After this step, select the “DKube Inference” application by right-clicking and opening a new tab.

This will open a tab that contains the test inference application. Fill in the fields as follows:

| Field | Value |
|---|---|
| Model Serving url | Endpoint API from the Inferences screen highlighted in the previous section |
| Authorization Token | OAuth token from the Developer Settings menu at the top right of the screen |
| Model Type | mnist |
| Upload Image | As described below |

The test image can be downloaded to your local workstation from https://oneconvergence.com/guide/downloads/3.png and used in the Upload Image field.
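For readers who want to see how the same three inputs (serving URL, token, image) could fit together in a script, here is a minimal sketch that only builds an HTTP request with Python's standard library. The request format is an assumption, not the documented DKube API: the `Bearer` authorization scheme, the JSON body with a base64 `image` field, and the function name `build_inference_request` are all hypothetical, so consult the endpoint's actual specification before sending anything.

```python
import base64
import json
import urllib.request


def build_inference_request(
    endpoint_url: str, token: str, image_bytes: bytes
) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the test image."""
    # Hypothetical payload schema: a single base64-encoded "image" field.
    payload = {"image": base64.b64encode(image_bytes).decode("ascii")}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumes the OAuth token is passed as a Bearer token.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

A request built this way could then be sent with `urllib.request.urlopen(request)`, using the Endpoint value from the Test Inferences screen and the contents of the downloaded `3.png` file.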